research

Robot Perception and Learning (RoPAL).

I have broad experience in robotics, including indoor mobile robots, industrial robot manipulators, humanoid robots, and outdoor unmanned autonomous surface vehicles. My research focuses primarily on Robot Perception and Learning (RoPAL), covering a broad range of subjects, including simultaneous localisation and mapping (SLAM), robot vision, robot reinforcement learning, tactile sensing, deep learning, and their applications in autonomous robot navigation, manipulation and, more broadly, smart manufacturing.

We envisage enhanced robot cognitive capability through advanced multi-modal perception, drawing on vision, tactile, lidar, and other sensors, together with continuous self-learning, so that robots can understand the environment in 3D, predict situations via machine/deep learning, and support humans in solving real-world problems. We aim to endow robots and unmanned systems with a higher level of autonomy, providing capabilities of advanced situation awareness, multi-modal sensing, robot active learning, localisation and mapping, optimal path planning, and multiple robot collaboration.

We encourage open-ended, cutting-edge research, and also aim at practical solutions driven by real-world industrial applications.

My group’s research interests can be loosely divided into the following areas:

- Deep Reinforcement Learning for robot navigation and manipulation

- State Estimation, e.g. Visual SLAM and Visual Odometry

- Sensor-guided autonomous robot navigation and manipulation

- Multi-modal robot sensing, such as robot vision and tactile sensing

- Computer Vision and data-driven, learning-based methods for smart manufacturing, including in-situ monitoring, 3D surface metrology, and robotic assembly

Sensor-guided Autonomous Navigation and SLAM

We aim to endow mobile robots with a higher level of autonomy, providing capabilities of advanced intelligent situation awareness, localisation and mapping, depth estimation, environment understanding in 3D, path planning for active exploration, and multiple robot collaboration, through the deployment of advanced perceptual capabilities, such as vision, lidar, and other sensors.

Recent research subjects of interest include deep learning-based depth estimation, mapless navigation with reinforcement learning/active learning, and reflection-aware visual SLAM that exploits ubiquitous reflections to improve SLAM performance.

We encourage implementing ideas on hardware robot platforms for real-world experiments to deal with dynamic and unstructured environments, and aim at deploying robots in industrial and domestic settings.

Vision-guided robot manipulation and Deep reinforcement learning

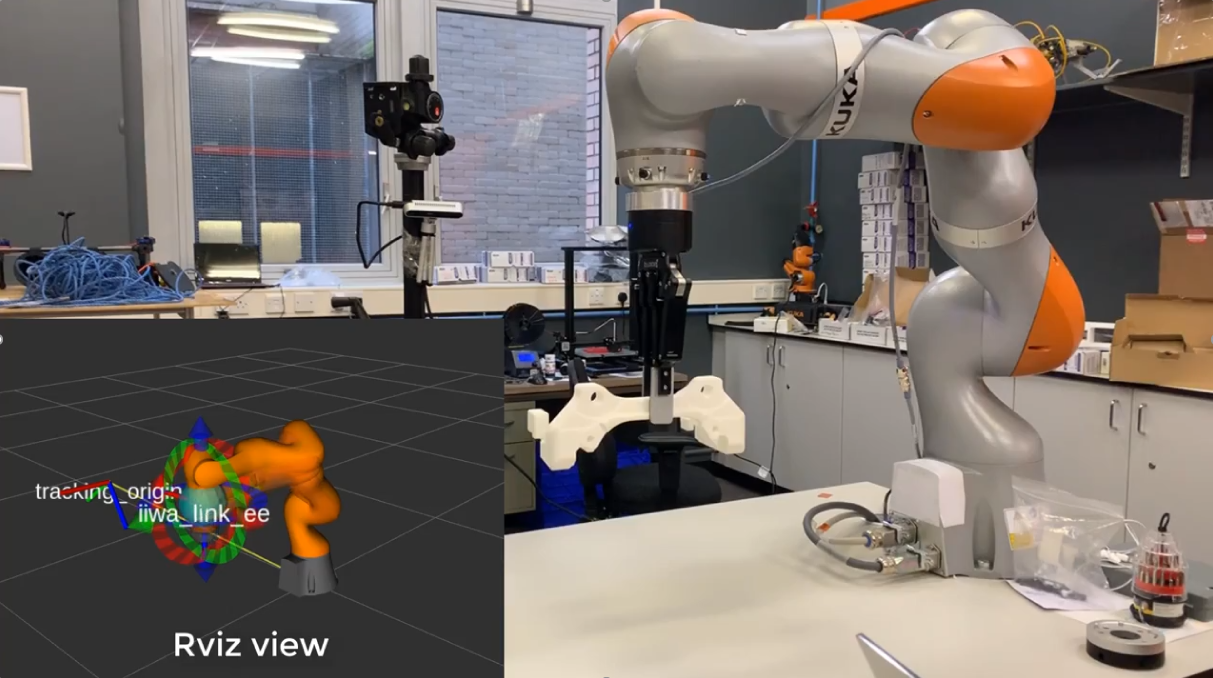

This work aims to develop robust and reliable vision-guided manipulation solutions that allow a robot manipulator to identify, localise, grasp, and place an object in an unstructured, cluttered environment. We have been actively collaborating with industry in this area.

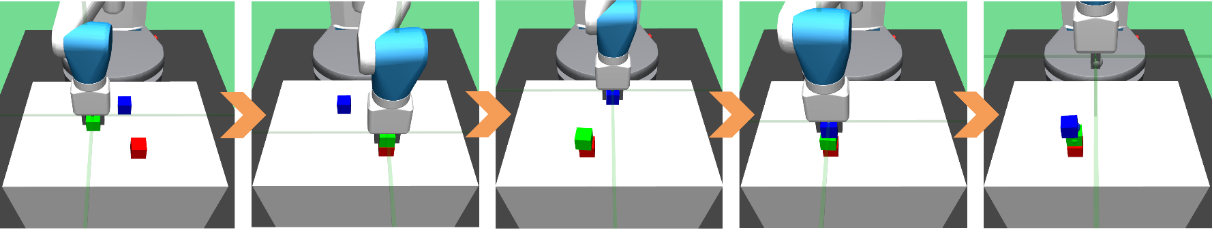

Reinforcement Learning is an emerging area that allows robots to learn new skills in situations where hand-coding is not suitable. The current work aims to research and develop a self-learning system for a robot to manipulate objects in a cluttered workspace for tasks that require multiple steps. This is challenging because rewards in such tasks are highly sparse, which makes it difficult for reinforcement learning to succeed.
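To illustrate why multi-step tasks lead to sparse rewards, here is a toy sketch. It is not the system described above: the function names and the assumption that each step succeeds independently with a fixed probability are hypothetical, chosen only to make the arithmetic visible.

```python
def sparse_reward(steps_completed: int, total_steps: int) -> float:
    """Reward is given only when every step of the task has been completed."""
    return 1.0 if steps_completed == total_steps else 0.0


def chance_of_reward(per_step_success: float, total_steps: int) -> float:
    """Probability of seeing any reward in one episode.

    Assumes (for illustration only) that each step succeeds independently
    with the same probability, e.g. under an untrained exploratory policy.
    """
    return per_step_success ** total_steps


if __name__ == "__main__":
    # The chance of ever observing a reward shrinks geometrically with task length.
    for n in (1, 3, 5, 10):
        print(n, chance_of_reward(0.2, n))
```

Under these assumptions, the learning signal becomes rarer as the number of required steps grows, which is one way to read the sparsity problem described above.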

We are interested in understanding and bridging the gap between real-world problems and laboratory-based research.

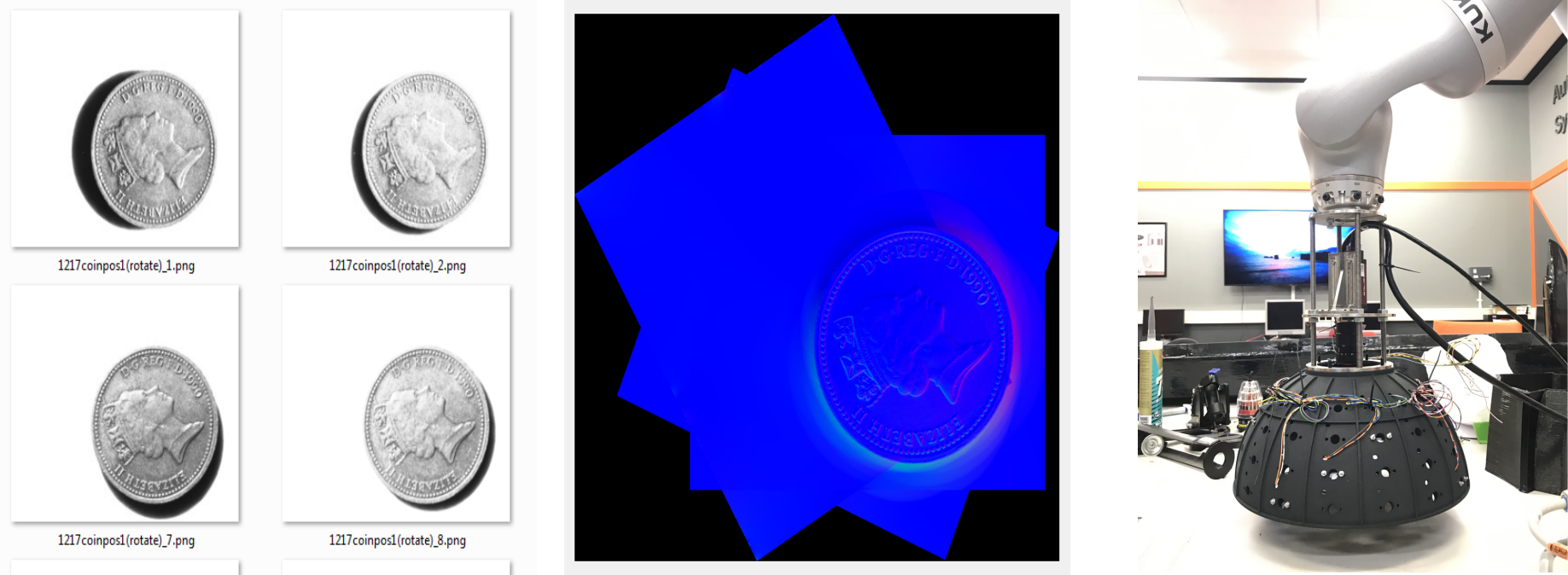

Automated robot-based 3D surface imaging for large-scale surface metrology

We have developed a robot-based photometric stereo image acquisition system.

Reconstructing the profile of a 3D surface has wide applications in reverse engineering, computer-aided medical diagnosis, in-situ defect detection for manufacturing processes, etc. Vision-based solutions have the advantages of being low-cost, non-destructive, and easy to implement. This project aims to explore solutions integrating advances in imaging techniques, artificial intelligence, and robot manipulation for efficient, large-scale, and high-resolution 3D surface reconstruction.
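For readers unfamiliar with photometric stereo, the sketch below shows the classical Lambertian formulation: given several images of a static surface under known light directions, per-pixel albedo and surface normals are recovered by least squares. This is a textbook illustration, not the acquisition system described here; the function name, array shapes, and the assumption of calibrated unit light directions with no shadows or specular highlights are ours.

```python
import numpy as np


def photometric_stereo(images: np.ndarray, light_dirs: np.ndarray):
    """Estimate albedo and unit normals under a Lambertian model.

    images:     (k, h, w) grayscale intensities, one image per light source.
    light_dirs: (k, 3) unit light direction vectors (assumed calibrated), k >= 3.
    Returns (albedo of shape (h, w), normals of shape (h, w, 3)).
    """
    k, h, w = images.shape
    intensities = images.reshape(k, -1)  # (k, h*w), one column per pixel

    # Lambertian model: intensities = light_dirs @ G, with G = albedo * normal.
    # Solve for G (3, h*w) in the least-squares sense.
    G, *_ = np.linalg.lstsq(light_dirs, intensities, rcond=None)

    albedo = np.linalg.norm(G, axis=0)        # length of G is the albedo
    normals = G / np.maximum(albedo, 1e-12)   # normalise, avoiding division by zero
    return albedo.reshape(h, w), normals.T.reshape(h, w, 3)


if __name__ == "__main__":
    # Synthetic check: a flat patch facing the camera, lit from three directions.
    lights = np.array([[0.0, 0.0, 1.0], [1.0, 0.0, 1.0], [0.0, 1.0, 1.0]])
    lights /= np.linalg.norm(lights, axis=1, keepdims=True)
    true_normal = np.array([0.0, 0.0, 1.0])
    imgs = (0.5 * (lights @ true_normal))[:, None, None] * np.ones((3, 4, 4))
    albedo, normals = photometric_stereo(imgs, lights)
    print(albedo[0, 0], normals[0, 0])
```

The recovered normal field would then typically be integrated to obtain a height map; that step is omitted here.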

Autonomous Collaborative Vehicles (ACV)

As part of my teaching activities (Mechatronics module), I have led teams of students in past years to build USVs (Unmanned Surface Vehicles) and UAVs. Related research covers robot behaviours, such as computer vision and sensor fusion for collision avoidance, vehicle collaboration, situation awareness and action planning. We have been working on using a USV as the carrier of a UAV, such that the UAV can land on the USV autonomously using multi-sensor-based tracking.

Data-driven fault diagnosis and self-validating sensors

I am currently involved in multi-disciplinary research, collaborating with manufacturing and mechanical engineering researchers to address real-world problems using data-driven solutions.

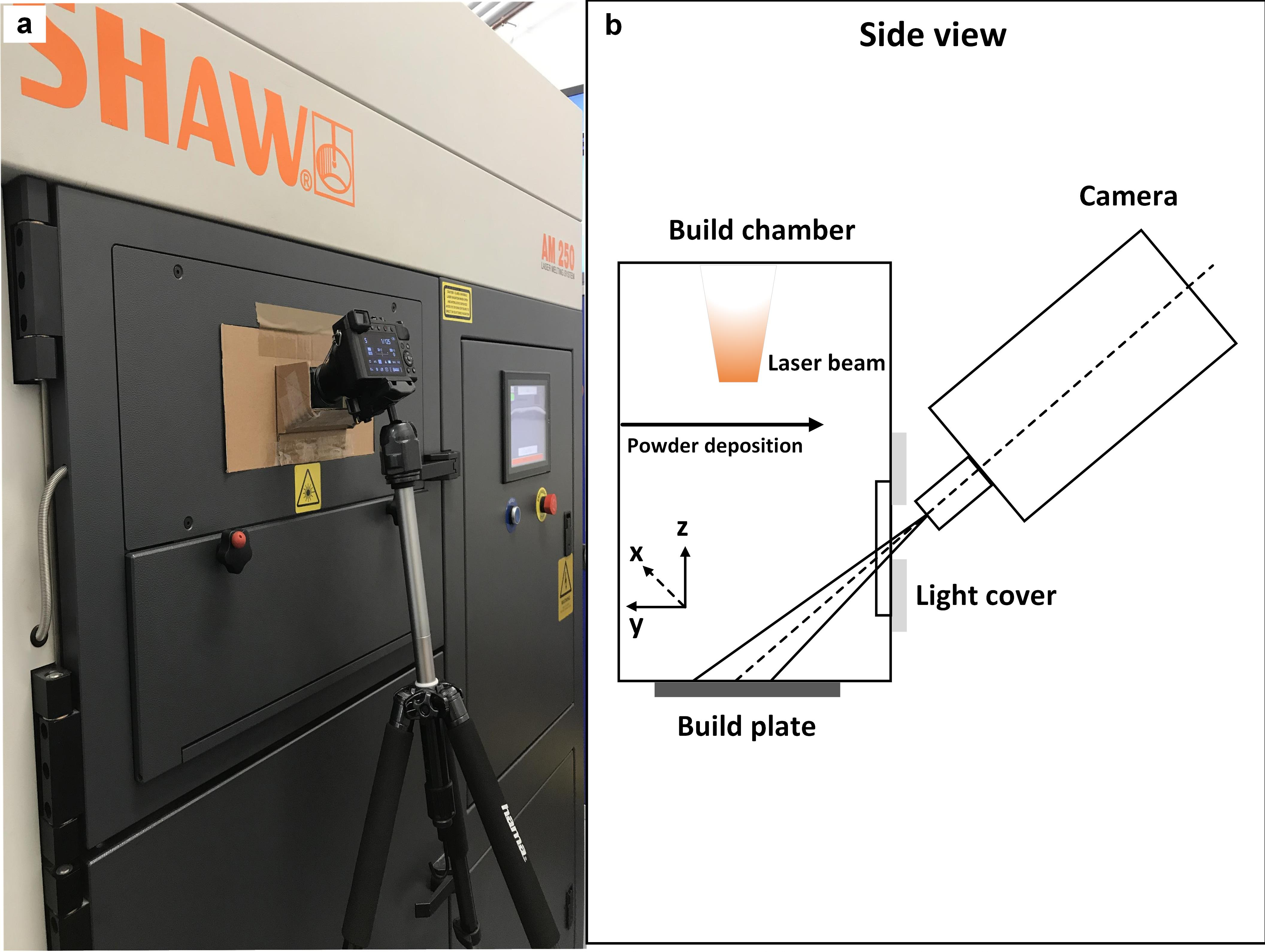

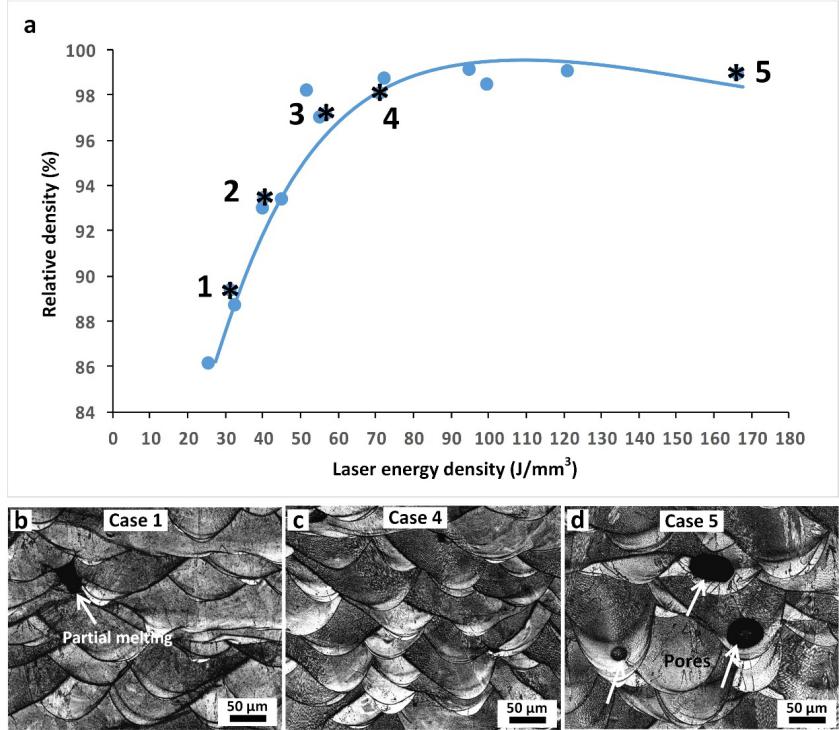

In selective laser melting (SLM), spattering is an important phenomenon that is highly related to the quality of the manufactured parts. Characterisation and monitoring of spattering behaviours are highly valuable in understanding the manufacturing process and improving the manufacturing quality of SLM. This work develops a method of automatic visual classification to distinguish spattering characteristics of SLM processes under different manufacturing conditions. Imagery data acquired using different technologies are also being investigated.

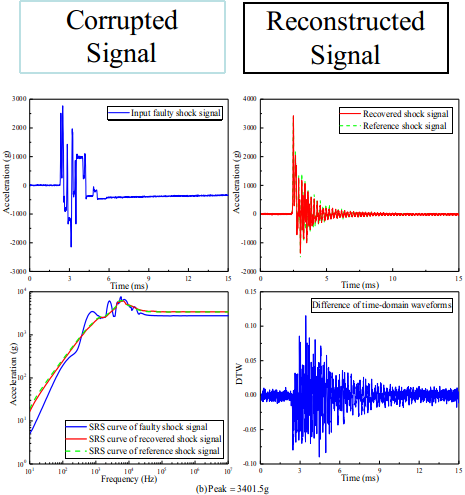

Another recent project takes a data-driven approach to automatically “Self-Validate”, “Self-Diagnose”, and “Repair” corrupted signals from faulty accelerometers using deep learning-based methods.